Robust Point Cloud Classification Based on Multi-Level Semantic Relationships for Urban Scenes

Abstract

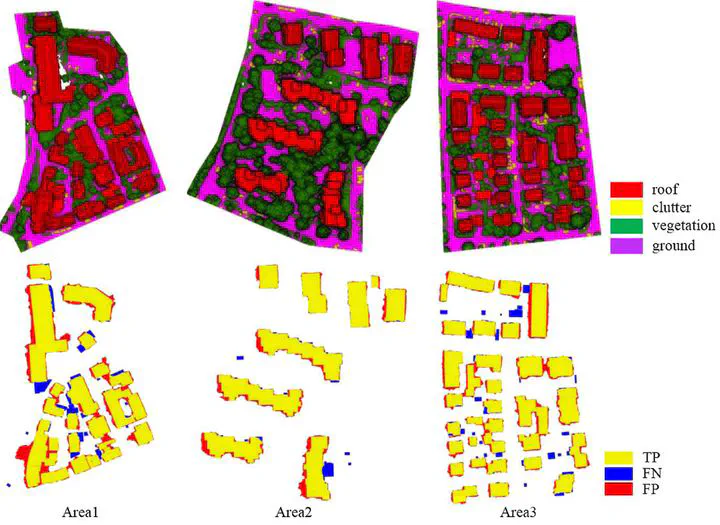

The semantic classification of point clouds is a fundamental part of three-dimensional urban reconstruction. For datasets with high spatial resolution but significantly more noise, a general trend is to exploit more contextual information to compensate for the reduced discriminative power of the features used for classification. However, previous approaches to incorporating contextual information are either too restrictive or limited to a small region. In this paper, we propose a point cloud classification method based on multi-level semantic relationships, including point-homogeneity, supervoxel-adjacency and class-knowledge constraints. The method is more versatile: it incrementally propagates classification cues from individual points to the object level and formulates them as a graphical model. The point-homogeneity constraint clusters points with similar geometric and radiometric properties into regular-shaped supervoxels that correspond to the vertices in the graphical model. The supervoxel-adjacency constraint contributes to the pairwise interactions by providing explicit adjacency relationships between supervoxels. The class-knowledge constraint operates at the object level based on semantic rules, guaranteeing the classification correctness of supervoxel clusters at that level. Tests on the International Society for Photogrammetry and Remote Sensing (ISPRS) benchmark show that the proposed method achieves state-of-the-art performance, with an average per-area completeness and correctness of 93.88% and 95.78%, respectively. Evaluation on photogrammetric point clouds and digital surface models (DSMs) generated from aerial imagery confirms the method’s reliability in several challenging urban scenes.
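For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how completeness and correctness are commonly computed for one class, assuming the usual definitions (completeness = TP / (TP + FN), correctness = TP / (TP + FP)). The function name and the sample labels are hypothetical, and the sketch counts individual samples rather than areas, so it illustrates the definitions only and is not the benchmark's evaluation procedure.

```python
def completeness_correctness(reference, predicted, label):
    """Return (completeness, correctness) for one class label.

    Assumes per-sample counting; the benchmark's per-area evaluation
    would weight by area instead (not reproduced here).
    """
    pairs = list(zip(reference, predicted))
    tp = sum(1 for r, p in pairs if r == label and p == label)  # correctly detected
    fn = sum(1 for r, p in pairs if r == label and p != label)  # missed
    fp = sum(1 for r, p in pairs if r != label and p == label)  # falsely detected
    completeness = tp / (tp + fn) if (tp + fn) else 0.0
    correctness = tp / (tp + fp) if (tp + fp) else 0.0
    return completeness, correctness


# Hypothetical toy labels, for illustration only.
reference = ["building", "building", "tree", "ground"]
predicted = ["building", "tree", "tree", "ground"]
building_scores = completeness_correctness(reference, predicted, "building")
tree_scores = completeness_correctness(reference, predicted, "tree")
```

In this toy case one of the two reference buildings is missed, which lowers completeness for "building", while the extra "tree" prediction lowers correctness for "tree".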

Publication

ISPRS Journal of Photogrammetry and Remote Sensing