Semantic Image Translation for Repairing the Texture Defects of Building Models

Dec 2, 2023

Qisen Shang

Han Hu

Haojia Yu

Bo Xu

Libin Wang

Qing Zhu

Abstract

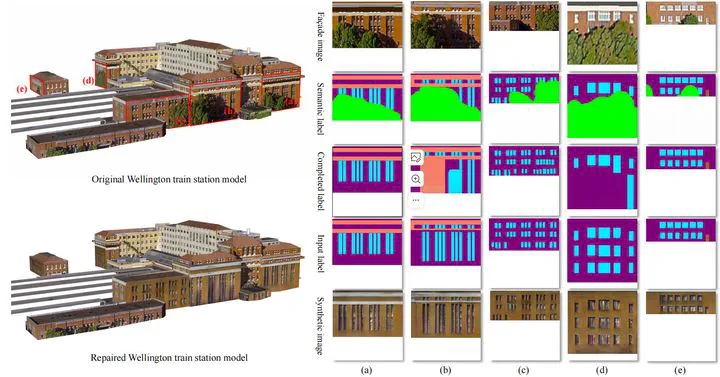

The accurate representation of 3D building models in urban environments is significantly hindered by texture occlusion, blurring, and missing details, which are difficult to mitigate with standard photogrammetric texture mapping pipelines. Current image completion methods often struggle to produce structured results and to handle the intricate, highly structured façade textures of diverse architectural styles. Furthermore, existing image synthesis methods have difficulty preserving high-frequency details and artificial regular structures, both of which are essential for realistic façade texture synthesis. To address these challenges, we introduce a novel approach that synthesizes façade texture images from a structured label map, guided by a ground-truth façade image, so that the result authentically reflects the architectural style. To preserve fine details and regular structures, we propose a regularity-aware multi-domain method that capitalizes on frequency information and corner maps. We also incorporate SEAN blocks into our generator to enable versatile style transfer. To generate plausible structured images without undesirable regions, we employ image completion techniques to remove occlusions according to semantics prior to image inference. Our method can also synthesize texture images with specific styles for façades that lack pre-existing textures, using manually annotated labels. Experimental results on publicly available façade image and 3D model datasets demonstrate that our method yields superior results and effectively addresses issues associated with flawed textures. The code and datasets are available at the website.

Publication

IEEE Transactions on Geoscience and Remote Sensing