V2PNet: Voxel-to-Point Feature Propagation and Fusion that Improves Feature Representation for Point Cloud Registration

Apr 1, 2023

Han Hu

Yongkuo Hou

Yulin Ding

Guoqiang Pan

Min Chen

Xuming Ge

Abstract

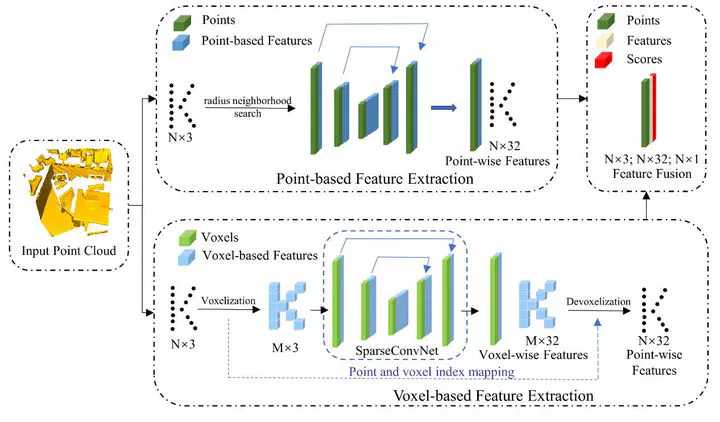

Both point-based and voxel-based methods can learn the local features of point clouds. Point-based methods are geometrically precise, but the discrete nature of point clouds degrades feature learning. Voxel-based methods can exploit the learning power of convolutional neural networks, but their resolution and detail extraction may be inadequate. This study therefore combines point-based and voxel-based methods to enhance localization precision and matching distinctiveness. The core procedure is embodied in V2PNet, a fused neural network that we designed to perform voxel-to-point propagation and fusion, which integrates the two encoder-decoder branches. Experiments are conducted on indoor and outdoor benchmark datasets acquired with different platforms and sensors, namely 3DMatch and KITTI, achieving a registration recall of 89.4% and a success rate of 99.86%, respectively. Qualitative and quantitative evaluations demonstrate that V2PNet improves semantic awareness, geometric structure discernment, and other performance metrics. The code is publicly available at https://github.com/houyongkuo/V2PNet.
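To make the idea of voxel-to-point propagation and fusion concrete, the following is a minimal NumPy sketch and not the authors' implementation. It assumes that voxel features are simple per-voxel averages (V2PNet learns them with a convolutional encoder-decoder branch) and that fusion is plain concatenation; the function names `voxelize_mean` and `propagate_and_fuse` are hypothetical and chosen only for illustration.

```python
import numpy as np


def voxelize_mean(points, feats, voxel_size):
    """Average per-point features inside each occupied voxel.

    Assumption: a mean stands in for the learned voxel-branch features.
    Returns the voxel grid coordinates, one feature row per voxel, and
    the index of the voxel that contains each point.
    """
    coords = np.floor(points / voxel_size).astype(np.int64)
    uniq, inverse = np.unique(coords, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # flat index regardless of NumPy version
    sums = np.zeros((len(uniq), feats.shape[1]))
    np.add.at(sums, inverse, feats)  # accumulate features per voxel
    counts = np.bincount(inverse, minlength=len(uniq))[:, None]
    return uniq, sums / counts, inverse


def propagate_and_fuse(point_feats, voxel_feats, inverse):
    """Copy each voxel's feature to the points it contains, then concatenate.

    Assumption: concatenation stands in for the learned fusion step.
    """
    return np.concatenate([point_feats, voxel_feats[inverse]], axis=1)
```

In this sketch every point keeps its own precise feature and gains the coarser context of its enclosing voxel, which is the intuition behind combining the two branches; the actual network architecture is described in the paper and the linked repository.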

Publication

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing