Research Assistant

Faculty of Geosciences and Environmental Engineering

Southwest Jiaotong University

I am currently a Research Assistant in the Faculty of Geosciences and Environmental Engineering of Southwest Jiaotong University in Chengdu, China. From Nov. 2017 to Jan. 2019, I worked as a Postdoctoral Fellow at Purdue University (USA) under the supervision of Prof. Jie Shan. Before that, I spent 10 years studying Photogrammetry and Remote Sensing at Wuhan University, where I received my bachelor's degree from the School of Geomatics and Geodesy (Sep. 2007 to Jun. 2011) and my Ph.D. from the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing (Sep. 2011 to Jun. 2017) under the supervision of Prof. Wanshou Jiang.

I am now focusing on the 3D reconstruction of the Earth, at both global and regional scales, using datasets collected from satellites, aircraft, drones, vehicles, backpacks and hand-held devices.

Faculty of Geosciences and Environmental Engineering

Southwest Jiaotong University

Civil Engineering

Purdue University, USA

State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing

Wuhan University

School of Geomatics and Geodesy

Wuhan University

Awarded to our paper "Urban Semantic 3D Reconstruction from Multiview Satellite Imagery"

Urban reconstruction has remained a hot topic for the past three decades. Topics such as the digital city and the smart city, together with the applications built on them, have drawn considerable attention, ranging from government planning and management to industrial uses such as VR games and digital maps. 3D vector models are the most important fundamental data source, directly determining the quality and cost of the whole system. Even considering only the market within China, the total value is estimated at "hundreds of billions". Although the data sources for urban reconstruction vary, current research mainly concentrates on processing point clouds, obtained either from LiDAR or by stereo image matching, with observation platforms ranging from ground to aerial and satellite. Accordingly, our research focuses on the theory, algorithms and applications related to point cloud processing.

The data source we use:

Research on the hierarchical topology identification and model reconstruction of large area urban city from aerial point clouds, PI

DANESFIELD: DAta Nexus for Estimating Semantics and Fusing Inferred Exterior Layers in 3D, Participant

3D Reconstruction of Natural Terrain and Man-made Objects from High-resolution Remote Sensing Image, Participant

Aerial Photogrammetry and 3D Modeling of Overhead Power Transmission Lines and its Application in the Management of Power Transmission, Participant

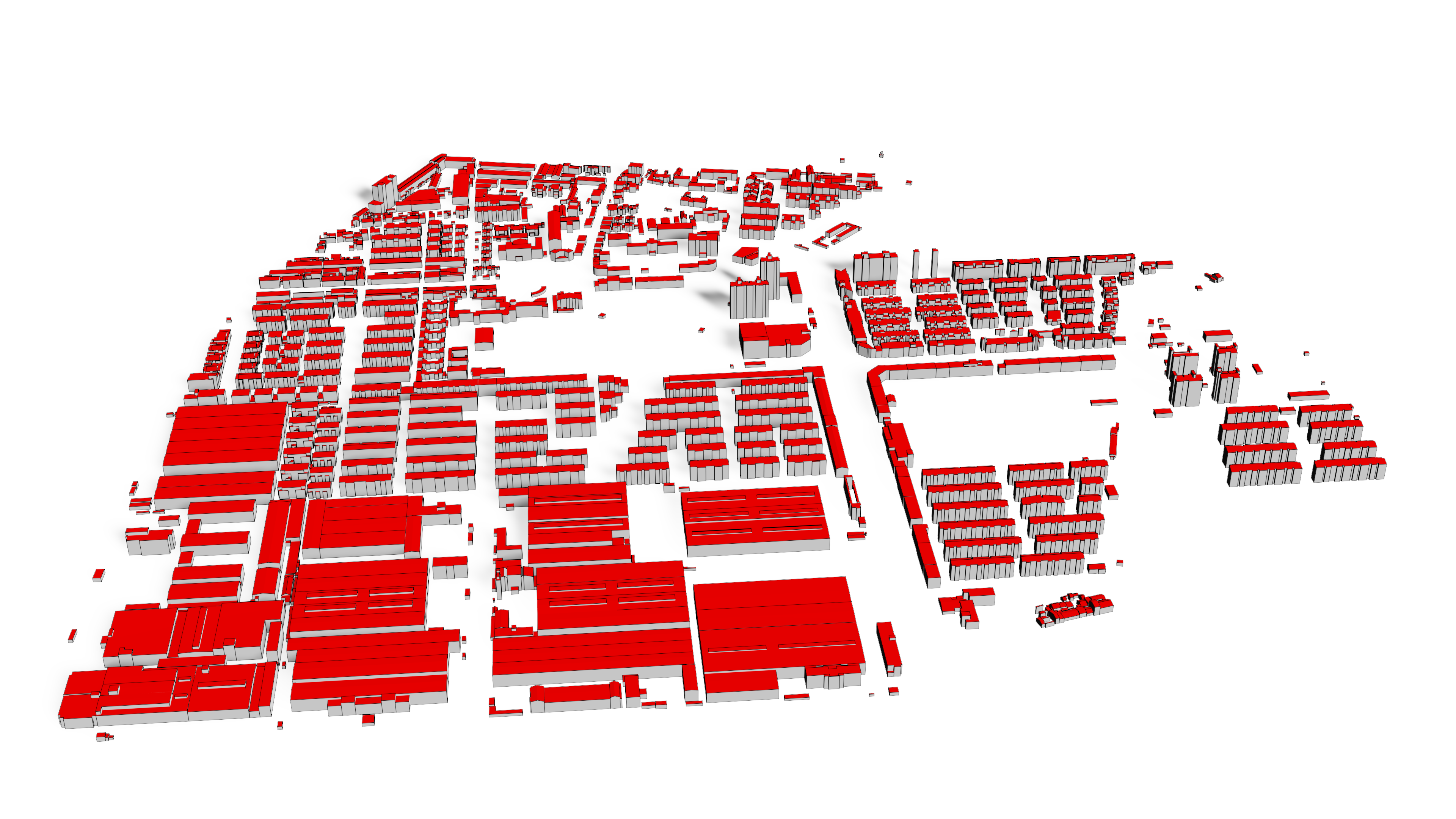

Methods for automated 3D urban modeling typically result in very dense point clouds or surface meshes derived from either overhead lidar or imagery (multiview stereo). Such models are very large and have no semantic separation of individual structures (e.g., buildings, bridges) from the terrain. Furthermore, such dense models often appear "melted" and do not capture sharp edges. This paper demonstrates an end-to-end system for segmenting buildings and bridges from terrain and estimating simple, low-polygon, textured mesh models of these structures. The approach uses multiview-stereo satellite imagery as a starting point, but this work focuses on segmentation methods and regularized 3D surface extraction. Our work is evaluated on the IARPA CORE3D public data set using the associated ground truth and metrics. A web-based application deployed on AWS runs the algorithms and provides visualization of the results. Both the algorithms and the web application are provided as open-source software as a resource for further research or product development.
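The abstract does not spell out how structures are separated from terrain. As a rough illustration of that generic idea only, the sketch below thresholds a normalized DSM (surface height minus terrain height) on a grid and then gives each connected group of raised cells its own label, so each building or bridge becomes a separate object. This is a classic baseline, not the DANESFIELD segmentation method; the function names, the gridded inputs and the 2.5 m threshold are assumptions.

```python
from collections import deque


def above_ground_mask(dsm, dtm, min_height=2.5):
    """Mark cells whose normalized height (DSM - DTM) exceeds min_height metres.

    dsm, dtm: equally sized 2D lists of floats (surface and terrain heights).
    The 2.5 m default is an illustrative assumption, not a published value.
    """
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same shape")
    return [[(s - t) > min_height for s, t in zip(row_s, row_t)]
            for row_s, row_t in zip(dsm, dtm)]


def label_components(mask):
    """Give each 4-connected region of True cells its own integer label (0 = terrain)."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            count += 1                      # start a new structure
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:                    # breadth-first flood fill
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```

For a 3 x 4 grid with flat terrain at 10 m and two raised columns of cells, `label_components(above_ground_mask(dsm, dtm))` returns two labels, one per raised structure. A real pipeline would follow this with boundary regularization and mesh simplification, which are not shown.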

3D building models are digital models of urban areas that represent buildings, and they play an important role in urban planning and smart cities. Their components are described and represented by corresponding 2D and 3D spatial data and geo-referenced data. These models can be generated from stereo aerial images, satellite images or LiDAR point clouds. In this paper, we propose a geometric-object-based building reconstruction method. The paper is structured in three parts: the first introduces our motivation and related work, the second introduces the methodology and processes we used, and the third presents the test results. Results from point clouds generated from WorldView high-resolution satellite images are used to demonstrate the performance of our approach.

Material recognition methods use image context and local cues for pixel-wise classification. In many cases only a single image is available to make a material prediction. Image sequences, routinely acquired in applications such as multiview stereo, can provide a sampling of the underlying reflectance functions that reveal pixel-level material attributes. We investigate multi-view material segmentation using two datasets generated for building material segmentation and scene material segmentation from the SpaceNet Challenge satellite image dataset. In this paper, we explore the impact of multi-angle reflectance information by introducing the reflectance residual encoding, which captures both the multi-angle and multispectral information present in our datasets. The residuals are computed by differencing the sparse-sampled reflectance function with a dictionary of pre-defined dense-sampled reflectance functions. Our proposed reflectance residual features improve material segmentation performance when integrated into pixel-wise and semantic segmentation architectures. At test time, predictions from individual segmentations are combined through softmax fusion and refined by building segment voting. We demonstrate robust and accurate pixel-wise segmentation results using the proposed material segmentation pipeline.
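The residual computation described above can be sketched for a single spectral band and a one-dimensional viewing-angle parameterization. Assumptions: each dictionary entry is a reference reflectance curve sampled on a shared dense angle grid, it is evaluated at the observed angles by linear interpolation, and the residuals for all entries are concatenated into one feature vector (for multispectral data, one would repeat this per band). The fusion step is shown as the mean of per-model softmax probabilities, one plausible reading of "softmax fusion". All function names are hypothetical.

```python
import math
from bisect import bisect_left


def interp(xs, ys, x):
    """Piecewise-linear interpolation of ys(xs) at x; xs strictly ascending, clamped at the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_left(xs, x)                  # xs[i - 1] < x <= xs[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def reflectance_residuals(obs_angles, obs_values, dense_angles, dictionary):
    """Observed (sparse) reflectance minus each dense reference curve, at the observed angles."""
    features = []
    for reference in dictionary:
        for angle, value in zip(obs_angles, obs_values):
            features.append(value - interp(dense_angles, reference, angle))
    return features


def softmax(logits):
    """Numerically stable softmax over one list of class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_fusion(logit_sets):
    """Average the class probabilities predicted by several segmentation models for one pixel."""
    probs = [softmax(logits) for logits in logit_sets]
    return [sum(p[k] for p in probs) / len(probs) for k in range(len(probs[0]))]
```

With two observations at 5° and 20° and two reference curves, `reflectance_residuals` yields four residuals (two per dictionary entry); small residuals against one entry indicate that the pixel's angular behaviour resembles that reference material.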

3D semantic labeling is a fundamental task in airborne laser scanning (ALS) point cloud processing. The complexity of observed scenes and the irregularity of point distributions make this task quite challenging. Existing methods rely on a large number of features for the LiDAR points and on the interaction of neighboring points, but cannot fully exploit their potential. In this paper, we use a convolutional neural network (CNN)-based method that extracts high-level representations of the features. A point-based feature image generation method is proposed that transforms the 3D neighborhood features of a point into a 2D image. First, for each point in the ALS data, the local geometric features, global geometric features and full-waveform features of its neighboring points within a window are extracted and transformed into an image. Then, the feature images are used as the input of a CNN model for the 3D semantic labeling task. Finally, to allow performance comparisons with existing approaches, we evaluate our framework on the publicly available datasets provided by the International Society for Photogrammetry and Remote Sensing Working Groups II/4 (ISPRS WG II/4) benchmark tests on 3D labeling. The experimental results achieve 82.3% overall accuracy, the best among all considered methods.
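The point-based feature image generation can be pictured with a small sketch. The neighbours of a point inside a square XY window are binned into a regular grid centred on that point, and each cell stores the mean of each feature over the neighbours that fall into it, giving one image channel per feature. The window size, grid size, per-cell averaging and zero-filling of empty cells are assumptions for illustration, not the paper's settings, and the function name is hypothetical.

```python
def feature_image(center, points, features, window=4.0, size=4):
    """Rasterize neighbour features around `center` into a size x size x C nested list.

    center: (x, y, z) of the point being labeled.
    points: neighbour coordinates (x, y, z); features: one equal-length tuple per neighbour.
    The XY square of side `window` metres centred on `center` is split into size x size cells;
    each cell holds the mean feature vector of the neighbours inside it (zeros if empty).
    """
    n_channels = len(features[0])
    sums = [[[0.0] * n_channels for _ in range(size)] for _ in range(size)]
    counts = [[0] * size for _ in range(size)]
    half, cell = window / 2.0, window / size
    for (x, y, _z), feat in zip(points, features):
        dx, dy = x - center[0] + half, y - center[1] + half   # offsets from the window corner
        if not (0.0 <= dx < window and 0.0 <= dy < window):
            continue                                          # neighbour lies outside the window
        col = min(int(dx // cell), size - 1)                  # guard against float round-up
        row = min(int(dy // cell), size - 1)
        counts[row][col] += 1
        for k in range(n_channels):
            sums[row][col][k] += feat[k]
    return [[[s / counts[r][c] if counts[r][c] else 0.0 for s in sums[r][c]]
             for c in range(size)] for r in range(size)]
```

Each resulting image (here 4 x 4 with one channel per feature) would then be stacked into a tensor and fed to the CNN classifier for the centre point; the network itself is not shown.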

Object reconstruction from airborne LiDAR data is a hot topic in photogrammetry and remote sensing. Monitoring of fundamental power infrastructure plays a vital role in power transmission safety. This paper proposes a heuristic method, combining data-driven and model-driven strategies, for reconstructing the power pylons widely used in high-voltage transmission systems from airborne LiDAR point clouds. Structurally, a power pylon can be decomposed into two parts: the pylon body and the head. The reconstruction procedure assembles the two parts sequentially: first, the pylon body is reconstructed by a data-driven strategy, where a RANSAC-based algorithm is adopted to fit the four principal legs; second, a model-driven strategy is used to reconstruct the pylon head with the aid of a predefined 3D head model library, where the type of the pylon head is recognized by a shape context algorithm and its parameters are estimated by a Metropolis–Hastings sampler coupled with a simulated annealing algorithm. The proposed method has two advantages: (1) optimal strategies are adopted to reconstruct the different pylon parts, which are robust to noise and partially missing data; and (2) both the number of parameters and their search space are greatly reduced when estimating the head model's parameters, because the body reconstruction results, information about the original point cloud, and the relationships between parameters are used in the pylon head reconstruction process. Experimental results show that the proposed method can efficiently reconstruct power pylons, and the average residual between the reconstructed models and the raw data was smaller than 0.3 m.
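The head-parameter estimation couples Metropolis–Hastings sampling with simulated annealing. A generic sketch of that combination follows: a symmetric Gaussian random-walk proposal, a Metropolis acceptance test at a temperature that decreases geometrically, and a record of the best state visited. The toy energy (a two-parameter line fit) is a hypothetical stand-in for the pylon head model, and the step size, cooling rate and iteration count are assumptions, not values from the paper.

```python
import math
import random


def anneal_mh(energy, theta0, step=0.1, t0=1.0, cooling=0.999, iters=5000, seed=0):
    """Minimize energy(theta) with Metropolis-Hastings moves under a cooling temperature.

    Returns (best_theta, best_energy) over all accepted states.
    """
    rng = random.Random(seed)
    theta = list(theta0)
    e = energy(theta)
    best, best_e = list(theta), e
    t = t0
    for _ in range(iters):
        # Symmetric proposal, so the MH acceptance ratio reduces to exp(-dE / T).
        cand = [p + rng.gauss(0.0, step) for p in theta]
        e_cand = energy(cand)
        if e_cand <= e or rng.random() < math.exp(-(e_cand - e) / t):
            theta, e = cand, e_cand
            if e < best_e:
                best, best_e = list(theta), e
        t *= cooling                        # geometric cooling schedule (assumed)
    return best, best_e


# Hypothetical stand-in for a head model: fit y = a*x + b to noise-free samples.
XS = [-2.0, -1.0, 0.0, 1.0, 2.0]
YS = [2.0 * x + 1.0 for x in XS]


def line_energy(theta):
    """Mean squared residual between the toy model and the samples."""
    a, b = theta
    return sum((a * x + b - y) ** 2 for x, y in zip(XS, YS)) / len(XS)
```

Calling `anneal_mh(line_energy, [0.0, 0.0])` should drive the parameters toward a = 2, b = 1. Early on the high temperature lets the chain accept uphill moves and escape poor local minima; as the temperature falls, the sampler behaves more and more like a greedy local search.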

The identification and representation of building roof topology are basic but important issues for 3D building model reconstruction from point clouds. Conventionally, the roof topology is expressed by the roof topology graph (RTG), which stores the plane–plane adjacencies as graph edges. Because the graph edges are often decided based on local statistics between adjacent planes, topology errors are easily produced by noise, missing data, and errors propagated from pre-processing steps. In this work, the hierarchical roof topology tree (HRTT) is proposed, instead of the traditional RTG, to represent the topology relationships among different roof elements. Building primitives or child structures are taken as internal tree nodes; thus, the plane–model and model–model relations can be well described and further exploited. Integral constraints and extra verification procedures can also be easily introduced to improve the topology quality. As for the basic plane-to-plane adjacencies, we no longer decide all connections at the same time; rather, we decide the robust ones first. Those robust connections separate the whole model into simpler components step by step and provide the basic semantic information for identifying the ambiguous ones. In this way, the effects of minor structures or spurious ridges are confined to a local part of the building, while common features can be detected integrally. Experiments on various data show that the proposed method clearly improves the topology quality and produces more precise results. Compared with the RTG-based method, two topology quality indices increase from 80.9% and 79.8% to 89.4% and 88.2% in the test area. The integral model quality indices at the pixel level and the plane level reach 90.3% and 84.7%, respectively.
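The "robust connections first" idea can be illustrated with a plain RTG built in two stages. In the sketch below, candidate plane pairs carry a hypothetical confidence score in [0, 1] and are processed from most to least confident: robust pairs become RTG edges and merge planes into components via union-find, clearly weak pairs are rejected, and ambiguous pairs are set aside, grouped by the components they touch, so they can later be decided locally. The scores, both thresholds and the grouping rule are assumptions, and the HRTT itself is not reproduced here.

```python
def find(parent, i):
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def robust_first_rtg(n_planes, candidates, robust=0.8, reject=0.3):
    """Build an RTG from (i, j, score) adjacency candidates, deciding robust edges first.

    Returns (rtg, comp, pending):
      rtg     -- dict plane -> set of adjacent planes (robust edges only)
      comp    -- component root of each plane after merging robust edges
      pending -- ambiguous candidates keyed by the sorted pair of components they touch
    """
    parent = list(range(n_planes))
    rtg = {i: set() for i in range(n_planes)}
    ambiguous = []
    for i, j, score in sorted(candidates, key=lambda c: c[2], reverse=True):
        if score >= robust:                 # robust: accept and merge components
            rtg[i].add(j)
            rtg[j].add(i)
            ri, rj = find(parent, i), find(parent, j)
            if ri != rj:
                parent[ri] = rj
        elif score > reject:                # ambiguous: postpone the decision
            ambiguous.append((i, j, score))
        # scores <= reject are dropped as non-adjacent
    comp = [find(parent, i) for i in range(n_planes)]
    pending = {}
    for i, j, score in ambiguous:
        key = tuple(sorted((comp[i], comp[j])))
        pending.setdefault(key, []).append((i, j, score))
    return rtg, comp, pending
```

For five planes where pairs (0, 1), (1, 2) and (3, 4) are robust, (2, 3) is ambiguous and (0, 2) is weak, the robust edges form two components, and the single ambiguous edge between them is left pending for a later, local decision.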

RANdom SAmple Consensus (RANSAC) is a widely adopted method for LiDAR point cloud segmentation because of its robustness to noise and outliers. However, RANSAC tends to generate false segments consisting of points from several nearly coplanar surfaces. To address this problem, we formulate a weighted RANSAC approach for point cloud segmentation. In our proposed solution, the hard-threshold voting function, which considers both the point-plane distance and the normal vector consistency, is transformed into a soft-threshold voting function based on two weight functions. To improve weighted RANSAC’s ability to distinguish planes, we designed the weight functions according to the difference in error distribution between proper and improper plane hypotheses, and on this basis also defined an outlier suppression ratio. Using this ratio, a thorough comparison was conducted between the different weight functions to determine the best-performing one. The selected weight function was then compared with existing weighted RANSAC methods, the original RANSAC, and a representative region growing (RG) method. Experiments with two airborne LiDAR datasets of varying densities show that the various weighted methods improve the segmentation quality to different degrees, but the specifically designed weight functions significantly improve the segmentation accuracy and topology correctness. Moreover, the proposed method is much more robust than the RG method.
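To make the hard-versus-soft voting distinction concrete, the sketch below scores each plane hypothesis by summing, over all points, the product of a distance weight and a normal-consistency weight, instead of counting the points that pass both hard thresholds. The quadratic weight function, the thresholds and the iteration count are assumptions; they are not the weight functions selected in the paper, and the outlier suppression ratio is not implemented. Point normals are assumed to be unit vectors, and all names are hypothetical.

```python
import math
import random


def plane_from_points(p, q, r):
    """Unit normal n and offset d (n . x + d = 0) of the plane through three points, or None."""
    u = [q[k] - p[k] for k in range(3)]
    v = [r[k] - p[k] for k in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None                         # degenerate (collinear) sample
    n = [c / norm for c in n]
    return n, -sum(n[k] * p[k] for k in range(3))


def soft_weight(x, threshold):
    """Hypothetical weight: 1 at x = 0, falling quadratically to 0 at the threshold."""
    return max(0.0, 1.0 - (x / threshold) ** 2)


def weighted_ransac(points, normals, dist_th=0.1, angle_th_deg=15.0, iters=200, seed=0):
    """Return ((n, d), score) of the plane hypothesis with the highest soft vote."""
    rng = random.Random(seed)
    best, best_score = None, -1.0
    for _ in range(iters):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        score = 0.0
        for p, pn in zip(points, normals):
            dist = abs(sum(n[k] * p[k] for k in range(3)) + d)
            cos_a = min(1.0, abs(sum(n[k] * pn[k] for k in range(3))))  # sign-invariant
            angle = math.degrees(math.acos(cos_a))
            # Soft vote: product of distance and normal-consistency weights.
            score += soft_weight(dist, dist_th) * soft_weight(angle, angle_th_deg)
        if score > best_score:
            best, best_score = plane, score
    return best, best_score
```

On a 5 x 5 grid of ground points (normals pointing up) plus a few points on a distant vertical wall, the best hypothesis is the ground plane with a soft vote of 25. Unlike a hard count, a point just inside the distance threshold contributes only a small vote, which is what lets soft voting favour the proper plane over a slightly tilted one through nearly coplanar surfaces.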

In this work, we concentrate on the hierarchy and completeness of roof topology, aiming to avoid or correct errors in roof topology. The hierarchy of topology is expressed by the hierarchical roof topology graph (HRTG), in accordance with the CityGML definition of LOD (level of detail). We decompose the roof topology graph (RTG) progressively while maintaining the integrity and consistency of the data set. Common features such as collinear ridges or boundaries are calculated integrally to maintain their completeness. Roof items are detected only locally to reduce errors caused by sparse data or mutual interference. Finally, a topology completeness test is adopted to detect and correct errors in roof topology, resulting in a complete and hierarchical building model. Experiments show that our method clearly improves on the RTG-based reconstruction method, especially for sparse data or ambiguous roof topology.

Dr. Bo Xu

Room 6104, Faculty of Geosciences and Environmental Engineering

Southwest Jiaotong University

Xi'an Road, Gaoxin West District, Chengdu